Times are changing in the digital sphere, as the European Commission is taking action against manipulative site designs and other features that harm business competition and individual online safety.

In fact, the EC has already sent some 30 requests for additional information to various very large online platforms (“VLOPs” in EC parlance) and is studying their responses for possible violations of the Digital Services Act, which became fully applicable last weekend.

Among the VLOPs contacted are Apple, Booking.com, Facebook and Google Maps, confirms Deborah Behar, legal adviser in the EC’s Directorate-General for Communications Networks, Content and Technology. On 19 February, the EC also launched an investigation into TikTok for suspected infringements.

“Competition must work to the benefit of the consumer, and this cannot be achieved in a market monopolised by only a few players,” commented Barthelmé, chair of the competition authority, at an event on 20 February. In Luxembourg, it is the competition authority that coordinates the DSA at the national level. Barthelmé added that, to this end, the organisation “has launched a digital service that will evolve over time to supervise the proper application of the new DSA and DMA rules.”

(“DMA” refers to the “Digital Markets Act,” a related regulation introduced alongside the DSA.)

The new (European) cybersphere

Everyone is familiar with the current norm: page after page of terms and conditions written in legalese that is, for all intents and purposes, impenetrable. This is among the things that will change under the DSA. From now on, such texts must be clear and comprehensible. The target audience must be taken into consideration too: sites aimed at minors must tailor the language even further to make sure their users understand it.

Next comes advertising. Why am I getting this particular ad? Where does it come from? In an annual transparency report, platforms will have to explain the systems they use to determine which users see which ads. The report must also specify whether content moderation is carried out by algorithms or by humans. Major platforms will also have to let users refuse targeted advertising, while targeted advertising aimed at minors is prohibited on all platforms.

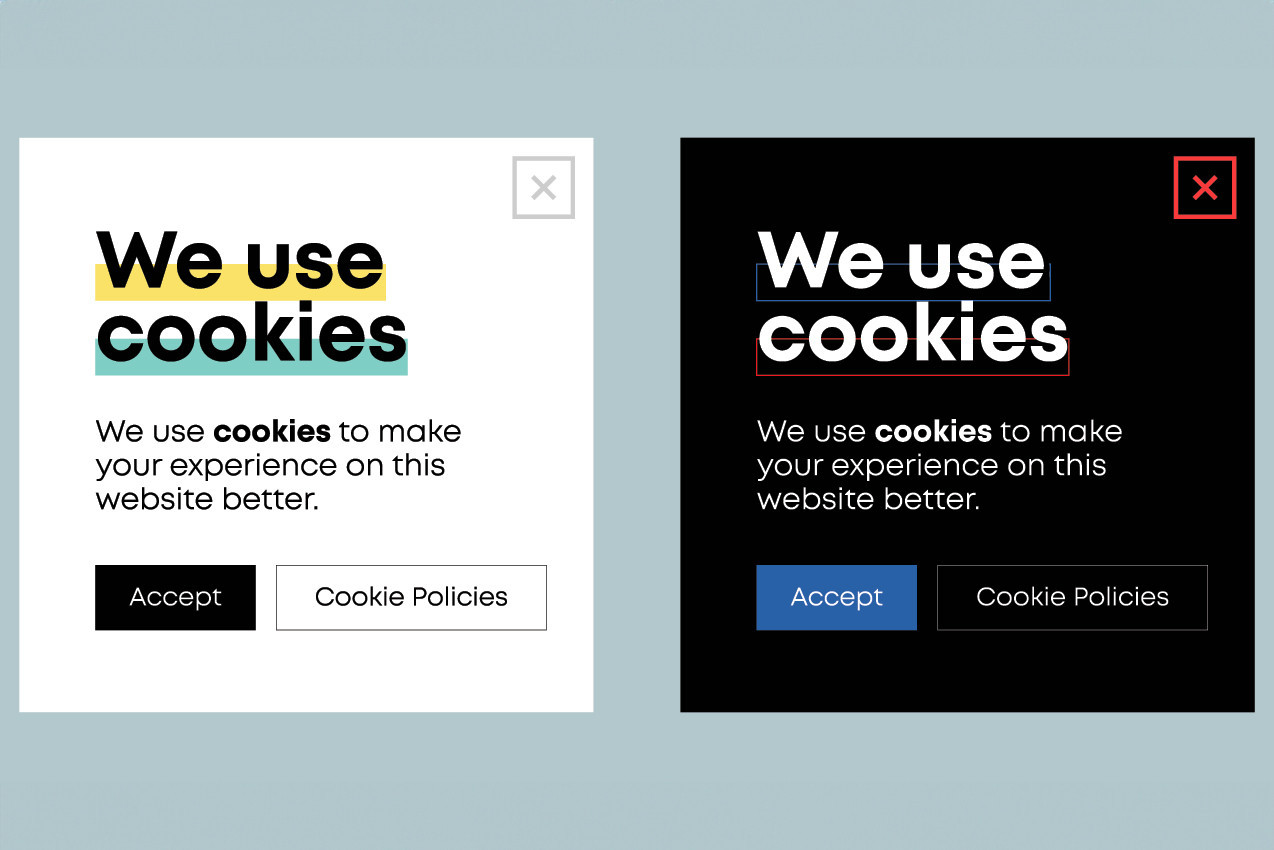

Next to go are “dark patterns”: design tricks that sites use to steer your choices, such as which links you click. These generally take the form of a misleading interface or a purposefully convoluted site design, for example one that makes users go through several steps and pages to unsubscribe from a service. Another example is the pop-up window asking you to approve the site’s use of cookies, where the “accept” option is highlighted, enlarged or otherwise made prominent, while any other option is less obvious, often hidden behind more ambiguous wording (“confirm my choice”) and requiring you to click through multiple pages.

Many webpages are designed so that accepting cookies is an easier, clearer and faster process than refusing them. Photo: Shutterstock

Consumers also have the right to know, clearly, where the online product or service comes from; who is selling it; and what recourse is available in the event of an issue on the platform.

According to a partner at law firm Arendt & Medernach, it will soon become possible to bring class actions in consumer law matters. The relevant draft bill is currently being examined by the professional chambers, though the text will probably have to be revised, at least in part, following the opinion of the Council of State, which issued several formal objections last year.

Finally, platforms must make dedicated forms available for reporting illegal content. In this area, Luxembourg’s competition authority has designated Bee Secure, a nonprofit devoted to helping people (especially young people) use the internet safely, as a trusted partner. Bee Secure’s “stopline” enables you to report illegal content such as hate speech, racism, terrorism or sexual abuse. Managing director Aline Hartz strongly encourages citizens “to show their civil courage” by using the stopline. Once reported, the content will be removed if it is indeed found to be illegal.

This article was originally published in Paperjam. It has been translated and edited for Delano.